Estimators

Bias

Let \(X_1, \ldots, X_n\) be an i.i.d. sample from some distribution \(F_\theta(x)\). An estimator \(\widehat\theta = \widehat\theta (X_1, \ldots, X_n)\) of \(\theta\) is called unbiased if \(\mathbb E \widehat\theta = \theta\). Otherwise \(\widehat\theta\) is called biased, and its bias is

\[
\mathrm{bias}(\widehat\theta) = \mathbb E \widehat\theta - \theta.
\]

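For example, the sample average \(\widehat\theta = \overline X_n\) is an unbiased estimator of the mean \(\theta = \mathbb E X_1\), since

\[
\mathbb E \overline X_n = \mathbb E\bigg(\frac 1n \sum_{i=1}^n X_i\bigg) = \frac 1n \sum_{i=1}^n \mathbb E X_i = \frac{n\theta}{n} = \theta.
\]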
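A quick Monte Carlo sanity check of this identity, as a sketch that assumes (for illustration only) that the \(X_i\) are exponential with mean \(\theta\): averaging \(\overline X_n\) over many independent samples should give a number close to \(\theta\) even for a small \(n\). The function name `mean_of_sample_means` is hypothetical.

```python
import random
import statistics


def mean_of_sample_means(theta, n, n_trials, seed=0):
    """Average X_bar_n over n_trials simulated samples of size n.

    Assumption for illustration: X_i ~ exponential with mean theta (rate 1 / theta).
    If X_bar_n is unbiased, the result should be close to theta for any n.
    """
    rng = random.Random(seed)
    sample_means = []
    for _ in range(n_trials):
        sample = [rng.expovariate(1 / theta) for _ in range(n)]
        sample_means.append(statistics.fmean(sample))
    return statistics.fmean(sample_means)


print(mean_of_sample_means(theta=2.0, n=5, n_trials=20_000))  # should be close to 2.0
```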

Sometimes an estimator \(\widehat\theta_n = \widehat\theta(X_1, \dots, X_n)\) is biased, but its bias vanishes as \(n\) grows. If \(\lim\limits_{n\to\infty} \mathbb E\widehat\theta_n = \theta\), then the estimator \(\widehat\theta_n\) is called asymptotically unbiased.
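For instance, the hypothetical estimator \(\widehat\theta_n = \frac{1}{n+1}\sum_{i=1}^n X_i\) of the mean \(\theta\) is biased, since \(\mathbb E\widehat\theta_n = \frac{n}{n+1}\theta\), but it is asymptotically unbiased. The sketch below simply evaluates its bias \(-\theta/(n+1)\) exactly with `fractions.Fraction`; the value \(\theta = 3\) is arbitrary.

```python
from fractions import Fraction


def bias_of_shrunk_mean(theta, n):
    """Exact bias of the hypothetical estimator (X_1 + ... + X_n) / (n + 1) of the mean theta.

    By linearity E[X_1 + ... + X_n] = n * theta, so E[estimator] = n * theta / (n + 1).
    """
    expectation = Fraction(n, n + 1) * theta
    return expectation - theta


for n in (1, 10, 100, 1000):
    print(n, bias_of_shrunk_mean(Fraction(3), n))  # -3/(n + 1): nonzero, but tends to 0
```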

Consistency

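An estimator \(\widehat\theta_n = \widehat\theta(X_1, \dots, X_n)\) is called consistent if it converges to \(\theta\) in probability: \(\widehat\theta_n \stackrel{P}{\to} \theta\), i.e.,

\[
\lim_{n\to\infty} \mathbb P\big(\vert\widehat\theta_n - \theta\vert > \varepsilon\big) = 0 \quad \text{for all } \varepsilon > 0.
\]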

By the law of large numbers, \(\widehat\theta_n = \overline{X}_n\) is a consistent estimator of the expectation \(\theta = \mathbb EX_1\) for any i.i.d. sample \(X_1, \ldots, X_n\) with finite expectation.
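Consistency can be watched numerically. The sketch below assumes, for illustration only, that \(X_i \sim U[0, 1]\) (so \(\theta = 0.5\)) and estimates \(\mathbb P(\vert\overline X_n - \theta\vert > \varepsilon)\) by simulation for \(\varepsilon = 0.05\); the estimated probability should shrink towards zero as \(n\) grows. The function name is hypothetical.

```python
import random
import statistics


def deviation_probability(n, eps, n_trials=1000, seed=0):
    """Monte Carlo estimate of P(|X_bar_n - theta| > eps).

    Assumption for illustration: X_i ~ U[0, 1], so theta = E X_1 = 0.5.
    """
    rng = random.Random(seed)
    theta = 0.5
    hits = 0
    for _ in range(n_trials):
        x_bar = statistics.fmean(rng.random() for _ in range(n))
        if abs(x_bar - theta) > eps:
            hits += 1
    return hits / n_trials


for n in (10, 100, 1000):
    print(n, deviation_probability(n, eps=0.05))  # shrinks towards 0 as n grows
```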

Bias-variance decomposition

The mean squared error (MSE) of \(\widehat{\theta}\) is

\[
\mathrm{MSE}(\widehat\theta) = \mathbb E\big(\widehat\theta - \theta\big)^2.
\]

Bias-variance decomposition:

\[
\mathrm{MSE}(\widehat\theta) = \mathrm{bias}^2(\widehat\theta) + \mathbb V\widehat\theta.
\]

Proof
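A short derivation: add and subtract \(\mathbb E\widehat\theta\) inside the square. The cross term vanishes because \(\mathbb E\widehat\theta - \theta\) is a constant and \(\mathbb E\big(\widehat\theta - \mathbb E\widehat\theta\big) = 0\):

\[
\begin{aligned}
\mathbb E\big(\widehat\theta - \theta\big)^2 &= \mathbb E\big(\widehat\theta - \mathbb E\widehat\theta + \mathbb E\widehat\theta - \theta\big)^2 \\
&= \mathbb E\big(\widehat\theta - \mathbb E\widehat\theta\big)^2 + 2\big(\mathbb E\widehat\theta - \theta\big)\,\mathbb E\big(\widehat\theta - \mathbb E\widehat\theta\big) + \big(\mathbb E\widehat\theta - \theta\big)^2 \\
&= \mathbb V\widehat\theta + 0 + \big(\mathbb E\widehat\theta - \theta\big)^2.
\end{aligned}
\]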

If \(\lim\limits_{n\to\infty}\mathrm{MSE}(\widehat{\theta}_n) = 0\), then the estimator \(\widehat{\theta}_n\) of \(\theta\) is asymptotically unbiased and consistent.
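A Monte Carlo sketch of both facts, under an assumed setup: \(X_i \sim \mathcal N(\theta, 1)\) with \(\theta = 3\) and the hypothetical biased estimator \(\widehat\theta_n = \frac{1}{n+1}\sum_{i=1}^n X_i\), whose exact MSE is \((\theta^2 + n)/(n+1)^2\). With the empirical bias and the population variance (dividing by the number of trials) of the simulated estimates, the decomposition holds up to floating-point rounding, and the MSE decreases as \(n\) grows.

```python
import random
import statistics


def mse_bias_variance(estimator, sampler, theta, n, n_trials=2000, seed=0):
    """Monte Carlo estimates of MSE, bias and variance of `estimator` at sample size n."""
    rng = random.Random(seed)
    estimates = [estimator([sampler(rng) for _ in range(n)]) for _ in range(n_trials)]
    mse = statistics.fmean((t - theta) ** 2 for t in estimates)
    bias = statistics.fmean(estimates) - theta
    variance = statistics.pvariance(estimates)  # population variance, divides by n_trials
    return mse, bias, variance


# Hypothetical setup: X_i ~ N(THETA, 1) and the biased estimator (X_1 + ... + X_n) / (n + 1)
THETA = 3.0


def shrunk_mean(xs):
    return sum(xs) / (len(xs) + 1)


def normal_sampler(rng):
    return rng.gauss(THETA, 1.0)


for n in (5, 50, 200):
    mse, bias, variance = mse_bias_variance(shrunk_mean, normal_sampler, THETA, n)
    print(n, round(mse, 4), round(bias ** 2 + variance, 4))  # the two columns agree
```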

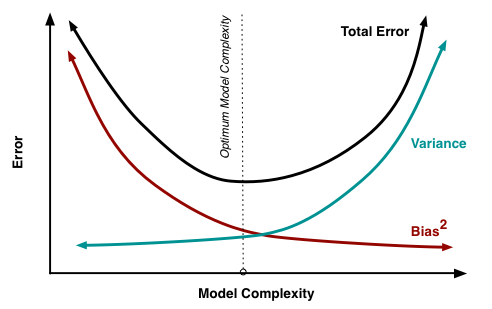

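In machine learning, the bias-variance decomposition is also known as the bias-variance tradeoff. For data \(y = f(x) + \varepsilon\) with \(\mathbb E\varepsilon = 0\), \(\mathbb V\varepsilon = \sigma^2\), and a model \(\widehat f\) trained on a random dataset independent of \(\varepsilon\), the expected squared error at a point \(x\) splits into squared bias, variance, and irreducible noise:

\[
\mathbb E\big(y - \widehat f(x)\big)^2 = \big(\mathbb E\widehat f(x) - f(x)\big)^2 + \mathbb V\widehat f(x) + \sigma^2.
\]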
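A toy illustration of the tradeoff (our own example, not a specific ML model): shrink the sample mean towards zero, \(\widehat\theta = c\,\overline X_n\) with \(0 \leqslant c \leqslant 1\). Decreasing \(c\) raises the squared bias \((1-c)^2\mu^2\) but lowers the variance \(c^2\sigma^2/n\), so the MSE is smallest somewhere in between. The values of \(\mu\), \(\sigma^2\) and \(n\) below are arbitrary, and `shrinkage_risk` is a hypothetical helper.

```python
def shrinkage_risk(c, mu, sigma2, n):
    """Exact squared bias, variance and MSE of the hypothetical estimator c * X_bar_n of mu.

    E[c * X_bar_n] = c * mu, so the bias is (c - 1) * mu; V[c * X_bar_n] = c**2 * sigma2 / n.
    """
    bias2 = ((c - 1) * mu) ** 2
    variance = c ** 2 * sigma2 / n
    return bias2, variance, bias2 + variance


mu, sigma2, n = 1.0, 4.0, 5  # arbitrary illustrative values
for c in (0.0, 0.25, 0.5, 0.75, 1.0):
    bias2, variance, mse = shrinkage_risk(c, mu, sigma2, n)
    print(f"c={c:.2f}  bias^2={bias2:.3f}  variance={variance:.3f}  mse={mse:.3f}")

# Setting the derivative of (1 - c)**2 * mu**2 + c**2 * sigma2 / n to zero gives the best c
c_best = mu ** 2 / (mu ** 2 + sigma2 / n)
print(f"best c={c_best:.3f}  mse={shrinkage_risk(c_best, mu, sigma2, n)[2]:.3f}")
```

Here the unbiased choice \(c = 1\) is not the one with the smallest MSE: accepting a little bias buys a larger reduction in variance.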

Asymptotic normality

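An estimator \(\widehat{\theta}_n\) is asymptotically normal if \(\frac{\widehat{\theta}_n - \theta}{\mathrm{se}(\widehat{\theta}_n)} \stackrel{D}{\to} \mathcal N(0,1)\), i.e.,

\[
\lim_{n\to\infty}\mathbb P\bigg(\frac{\widehat{\theta}_n - \theta}{\mathrm{se}(\widehat{\theta}_n)} \leqslant z\bigg) = \Phi(z) \quad \text{for all } z \in \mathbb R,
\]

where \(\Phi\) is the cdf of \(\mathcal N(0,1)\) and \(\mathrm{se}(\widehat\theta_n) = \sqrt{\mathbb V\widehat\theta_n}\) is the standard error.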

If \(X_1, \ldots, X_n\) is an i.i.d. sample from some distribution with finite expectation \(\mu\) and variance \(\sigma^2 > 0\), then by the central limit theorem \(\overline X_n\) is an asymptotically normal estimator of \(\mu\), with \(\mathrm{se}(\overline X_n) = \sigma/\sqrt n\).
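A simulation sketch of this statement, assuming for illustration a skewed distribution, \(X_i \sim \mathrm{Exp}(1)\) (so \(\mu = \sigma = 1\)): the fraction of samples with \((\overline X_n - \mu)/(\sigma/\sqrt n) \leqslant 1.96\) should approach \(\Phi(1.96) \approx 0.975\) as \(n\) grows. `statistics.NormalDist` supplies \(\Phi\); the function name `fraction_below` is hypothetical.

```python
import math
import random
import statistics


def fraction_below(n, z=1.96, n_trials=4000, seed=0):
    """Monte Carlo estimate of P((X_bar_n - mu) / se(X_bar_n) <= z).

    Assumption for illustration: X_i ~ Exp(1), a skewed distribution with mu = sigma = 1,
    so se(X_bar_n) = sigma / sqrt(n).
    """
    rng = random.Random(seed)
    mu, sigma = 1.0, 1.0
    se = sigma / math.sqrt(n)
    count = 0
    for _ in range(n_trials):
        x_bar = statistics.fmean(rng.expovariate(1.0) for _ in range(n))
        if (x_bar - mu) / se <= z:
            count += 1
    return count / n_trials


target = statistics.NormalDist().cdf(1.96)  # Phi(1.96), about 0.975
for n in (2, 30, 300):
    print(n, fraction_below(n), round(target, 4))
```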

Maximum likelihood estimation (MLE)

Let \(X_1, \ldots, X_n \sim F_\theta(x)\) be an i.i.d. sample. The likelihood (likelihood function) of the sample \(X_1, \ldots, X_n\) is simply its joint pmf or pdf. Regardless of the type of distribution, we denote the likelihood by

\[
\mathcal L(\theta) = p(X_1, \ldots, X_n \vert \theta).
\]

If the sample is i.i.d., the likelihood factorizes into a product of one-dimensional functions:

\[
\mathcal L(\theta) = \prod_{i=1}^n p(X_i \vert \theta).
\]

The maximum likelihood estimator (MLE) maximizes the likelihood:

\[
\widehat\theta_{\mathrm{ML}} = \arg\max_\theta \mathcal L(\theta).
\]

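Since a sum is easier to maximize than a product, one usually switches to the logarithm of the likelihood (log-likelihood); because \(\log\) is increasing, this does not change the maximizer. This is especially convenient for an i.i.d. sample, since then

\[
\log \mathcal L(\theta) = \sum_{i=1}^n \log p(X_i \vert \theta).
\]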
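As a sketch, take an exponential model \(p(x \vert \lambda) = \lambda e^{-\lambda x}\), \(x \geqslant 0\) (our choice; it does not appear above). Its log-likelihood \(n\log\lambda - \lambda\sum_{i=1}^n X_i\) is maximized at \(\widehat\lambda_{\mathrm{ML}} = 1/\overline X_n\). The code maximizes the log-likelihood numerically with a golden-section search (a generic optimizer swapped in for illustration) on simulated data and compares the result with this closed form.

```python
import math
import random


def exp_log_likelihood(lam, xs):
    """Log-likelihood of an i.i.d. sample xs under Exp(lam): sum of log(lam) - lam * x_i."""
    return sum(math.log(lam) - lam * x for x in xs)


def argmax_golden(f, lo, hi, tol=1e-9):
    """Golden-section search for the maximizer of a unimodal function f on [lo, hi]."""
    inv_phi = (math.sqrt(5) - 1) / 2  # about 0.618
    a, b = lo, hi
    c, d = b - inv_phi * (b - a), a + inv_phi * (b - a)
    while b - a > tol:
        if f(c) > f(d):  # the maximizer lies in [a, d]
            b, d = d, c
            c = b - inv_phi * (b - a)
        else:  # the maximizer lies in [c, b]
            a, c = c, d
            d = a + inv_phi * (b - a)
    return (a + b) / 2


rng = random.Random(0)
xs = [rng.expovariate(2.5) for _ in range(1000)]  # simulated data, true rate 2.5

lam_numeric = argmax_golden(lambda lam: exp_log_likelihood(lam, xs), 1e-6, 10.0)
lam_closed = len(xs) / sum(xs)  # 1 / X_bar: root of d/dlam [n log(lam) - lam * sum(xs)] = 0
print(lam_numeric, lam_closed)  # agree up to the search tolerance
```

The log-likelihood here is concave in \(\lambda\), so a one-dimensional search for a unimodal maximum is enough; for the models in the exercises below a closed form is usually easier.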

Properties of MLE (under certain regularity conditions)

consistency: \(\widehat \theta_{\mathrm{ML}} \stackrel{P}{\to} \theta\);

equivariance: if \(\widehat \theta_{\mathrm{ML}}\) is the MLE of \(\theta\), then \(\varphi(\widehat\theta_{\mathrm{ML}})\) is the MLE of \(\varphi(\theta)\);

asymptotic normality: \(\frac{\widehat \theta_{\mathrm{ML}} - \theta}{\widehat{\mathrm{se}}} \stackrel{D}{\to} \mathcal N(0,1)\);

asymptotic optimality: for sufficiently large \(n\), the estimator \(\widehat \theta_{\mathrm{ML}}\) has minimal variance.
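Equivariance can be checked numerically under an assumed exponential model (our choice): parametrize it either by the rate \(\lambda\) or by the mean \(\beta = \varphi(\lambda) = 1/\lambda\), maximize each log-likelihood separately with a ternary search, and compare \(\widehat\beta_{\mathrm{ML}}\) with \(1/\widehat\lambda_{\mathrm{ML}}\). The data are simulated and the helper names are hypothetical.

```python
import math
import random


def argmax_ternary(f, lo, hi, iters=200):
    """Ternary search for the maximizer of a unimodal function f on [lo, hi]."""
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if f(m1) < f(m2):
            lo = m1  # the maximizer lies in [m1, hi]
        else:
            hi = m2  # the maximizer lies in [lo, m2]
    return (lo + hi) / 2


rng = random.Random(1)
xs = [rng.expovariate(2.5) for _ in range(500)]  # simulated data, true rate 2.5
n, total = len(xs), sum(xs)


def loglik_rate(lam):
    """Log-likelihood in the rate parametrization: p(x | lam) = lam * exp(-lam * x)."""
    return n * math.log(lam) - lam * total


def loglik_mean(beta):
    """Log-likelihood in the mean parametrization beta = 1 / lam: p(x | beta) = exp(-x / beta) / beta."""
    return -n * math.log(beta) - total / beta


lam_hat = argmax_ternary(loglik_rate, 1e-6, 20.0)
beta_hat = argmax_ternary(loglik_mean, 1e-6, 20.0)
print(beta_hat, 1 / lam_hat)  # equivariance: the MLE of 1 / lam is 1 / lam_hat
```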

Exercises

Let \(X_1, \ldots, X_n\) be an i.i.d. sample from \(U[0, \theta]\) and \(\widehat\theta = X_{(n)}\) (the sample maximum). Is this estimator unbiased? Asymptotically unbiased? Consistent?

Show that an estimator \(\widehat{\theta}_n\) is consistent if it is asymptotically unbiased and \(\lim\limits_{n\to\infty}\mathbb{V}(\widehat{\theta}_n) = 0\).

Let \(X_1, \ldots, X_n\) be an i.i.d. sample from \(U[0, 2\theta]\). Show that the sample median \(\mathrm{med}(X_1, \ldots, X_n)\) is an unbiased estimator of \(\theta\). See also ML Handbook.

Let \(X_1, \ldots, X_n\) be an i.i.d. sample from a distribution with finite moments \(\mathbb EX_1\) and \(\mathbb EX_1^2\). Is the sample variance \(\overline S_n\) an unbiased estimator of \(\theta = \mathbb V X_1\)? Asymptotically unbiased?

There are \(k\) heads and \(n-k\) tails in \(n\) independent Bernoulli trials. Find the MLE of the probability of heads.

Find the MLE of \(\lambda\) if \(X_1, \ldots, X_n\) is an i.i.d. sample from \(\mathrm{Pois}(\lambda)\).

Let \(X_1, \ldots, X_n\) be an i.i.d. sample from \(\mathcal N(\mu, \tau)\). Find the MLEs of \(\mu\) and \(\tau\).

Find the MLEs of \(a\) and \(b\) if \(X_1, \ldots, X_n\) is an i.i.d. sample from \(U[a, b]\).