Unsupervised Learning#

Unsupervised learning refers to a class of machine learning problems where the model is tasked with finding patterns or relationships in data without explicit guidance or labeled examples. Unlike in supervised learning problems, there are no targets \(y_i\), and datasets consist of just feature vectors: \(\mathcal D = \{\boldsymbol x_i\}_{i=1}^n\).

Dimensionality Reduction#

Dimensionality reduction techniques transform high-dimensional data into a lower-dimensional representation while preserving important information. This is useful for:

Visualization of high-dimensional data

Reducing computational complexity

Removing noise and redundant features

Addressing the curse of dimensionality

Common techniques include:

Principal Component Analysis (PCA)

t-SNE (t-Distributed Stochastic Neighbor Embedding)

UMAP (Uniform Manifold Approximation and Projection)

Clustering#

Clustering algorithms group similar data points together based on their features or characteristics. Points within the same cluster should be similar to each other and different from points in other clusters.

Popular clustering algorithms:

K-means clustering

Hierarchical clustering

DBSCAN (Density-Based Spatial Clustering of Applications with Noise)

Gaussian Mixture Models

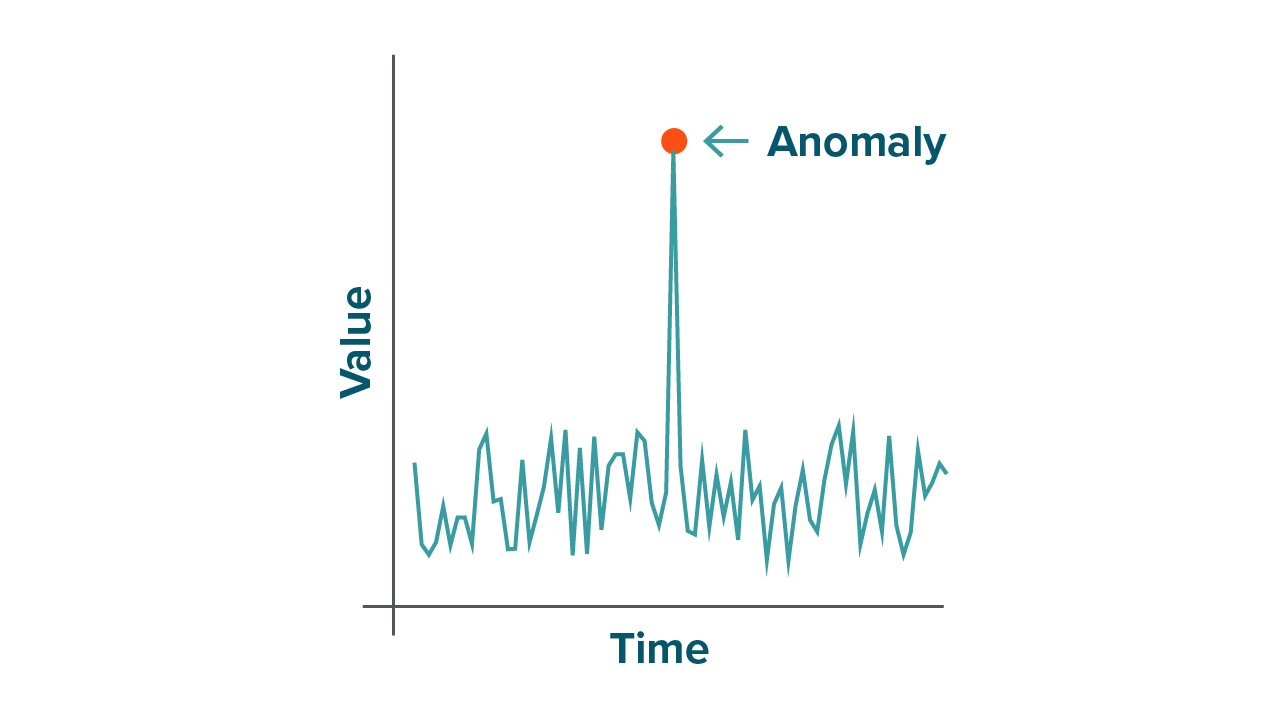

Anomaly Detection#

Anomaly detection (also known as outlier detection) identifies data points that deviate significantly from the expected pattern or behavior. These anomalies might indicate:

Fraud in financial transactions

Manufacturing defects

Network intrusions

Sensor malfunctions

Common approaches:

Statistical methods (z-score, IQR)

Isolation Forest

One-class SVM

Autoencoders

Association Rule Learning#

Association rule learning discovers interesting relationships between variables in large datasets. It’s particularly useful in:

Market basket analysis

Product recommendations

Web usage mining

Cross-selling strategies

Popular algorithms:

Apriori algorithm

FP-Growth

ECLAT

Topic Modeling#

Topic modeling algorithms uncover abstract “topics” that occur in a collection of documents. Each topic is represented as a distribution over words, and each document as a mixture of topics.

Common techniques:

Latent Dirichlet Allocation (LDA)

Non-negative Matrix Factorization (NMF)

Latent Semantic Analysis (LSA)

Applications:

Content recommendation

Document summarization

Trend analysis

Content organization